It’s harder to turn off robot begging for its life: Study

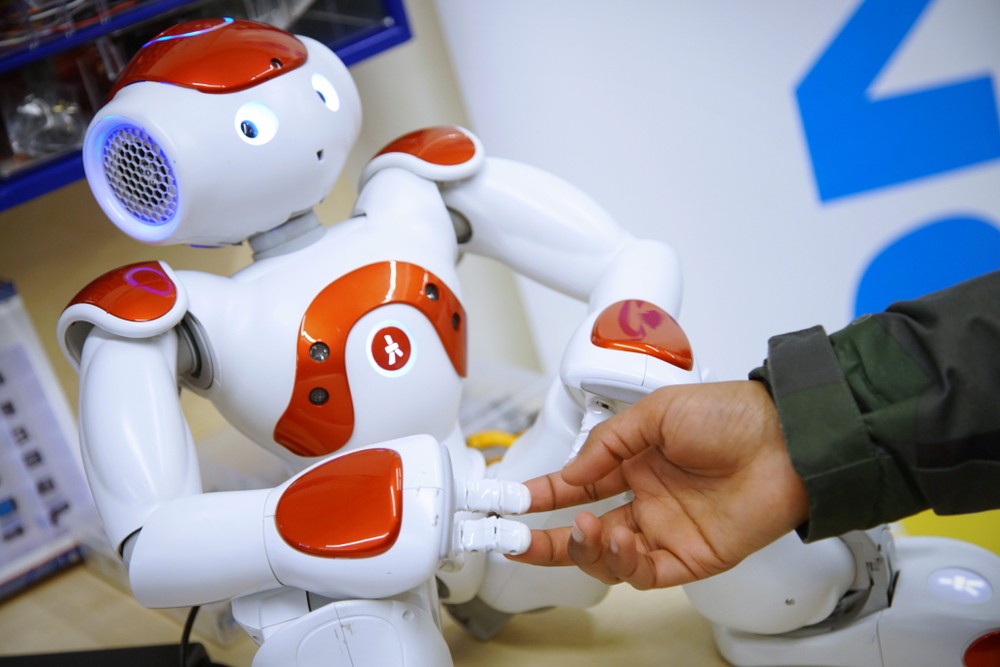

A Nao robot, the same model used by German researchers in an experiment which found out that turning off a robot became harder when it begged human subjects not to. (Shutterstock/MikeDotta)

German researchers found that turning off a robot became harder when it begged human subjects not to.

With the rapid rise of artificial intelligence and its growing adoption in everyday products, it makes sense to ask how interactive technology will become, and how humans will behave when faced with a highly interactive robot.

Researchers from the University of Duisburg-Essen put this to the test by observing how humans would react if a robot begged not to be turned off. Their study has been published online in the open-access journal PLOS One.

The researchers disguised their study by telling 89 volunteers that they were simply to perform several tasks with Nao, a small humanoid robot. Once the tasks were done, participants were asked to switch off Nao.

Results showed that roughly half of the participants had misgivings about turning off Nao when it started to plead for its life. Nao would say phrases like “No! Please do not switch me off!” and expressed a fear of being powered down. While the participants eventually did switch Nao off, 30 of the volunteers struggled morally with whether they should flip the off switch.

When asked later why they struggled, the most common response was that the robot had asked them not to. Other reasons included surprise at the sudden pleas and fear that they were doing something wrong in the experiment.

The researchers attributed the volunteers’ response to a theory called “the media equation”. The theory suggests that humans tend to treat non-human media (gadgets, robots, computers) as if they were human. A concept called the “rule of reciprocity” also came into play. Because Nao started to sound human when it begged for its life, the volunteers started to treat it like a person. This led to their hesitation in turning Nao off.

A similar study was conducted in 2007, which included video footage of the interaction between human and robot. In the footage, the researcher warned the robot that it was being turned off because it made a mistake. The robot proceeded to beg not to be turned off and even promised to behave.

How do the findings apply to modern society? Aike Horstmann, a PhD student at the University of Duisburg-Essen who led the study, told The Verge that it is something humans will eventually need to get used to.

“The media equation theory suggests we react to [robots] socially because for hundreds of thousands of years, we were the only social beings on the planet. Now we’re not, and we have to adapt to it. It’s an unconscious reaction, but it can change,” said Horstmann.

So if Siri, Cortana or the Google Assistant suddenly begs you not to turn a device off, remember who is actually in charge and just press that power button.

This article appeared on the Philippine Daily Inquirer newspaper website, which is a member of Asia News Network and a media partner of The Jakarta Post