Popular Reads

Top Results

Can't find what you're looking for?

View all search resultsPopular Reads

Top Results

Can't find what you're looking for?

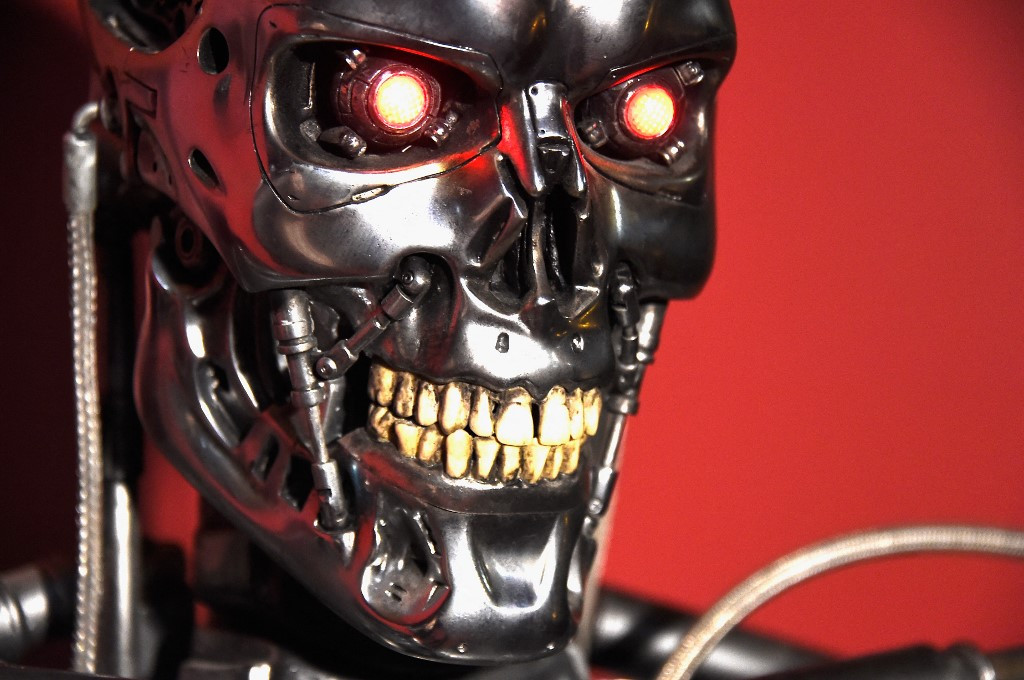

It's (not) alive! Google row exposes AI troubles

The Silicon Valley giant suspended one of its engineers last week who argued the firm's AI system LaMDA seemed "sentient," a claim Google officially disagrees with.

An internal fight over whether Google built technology with human-like consciousness has spilled into the open, exposing the ambitions and risks inherent in artificial intelligence that can feel all too real.

Last week the Silicon Valley giant suspended one of its engineers, who had argued that the firm's AI system LaMDA seemed "sentient," a claim Google officially disagrees with.

Several experts told AFP they were also highly skeptical of the consciousness claim, but said human nature and ambition could easily confuse the issue.

"The problem is that... when we encounter strings of words that belong to the languages we speak, we make sense of them," said Emily M. Bender, a linguistics professor at the University of Washington.

"We are doing the work of imagining a mind that's not there," she added.

LaMDA is a massively powerful system that uses advanced models, trained on more than 1.5 trillion words, to mimic how people communicate in written chats.

The system was built on a model that observes how words relate to one another and then predicts what words it thinks will come next in a sentence or paragraph, according to Google's explanation.
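To make the idea of next-word prediction concrete, here is a deliberately tiny sketch in Python. It is not LaMDA and not Google's method: it simply counts which word most often follows another in a short sample text, whereas systems like LaMDA rely on far larger neural models. The function names `train_bigram` and `predict_next` and the sample sentence are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if none was seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical sample text, standing in for the trillions of words a real system sees.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "cat"
```

The toy has no understanding of cats or mats; it only reproduces patterns found in its training text, which is the point the experts quoted here make about far larger systems.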